A new protocol is drawing attention in the AI tooling world: the Model Context Protocol (MCP). Introduced by Anthropic in late 2024, MCP aims to standardize how Large Language Models (LLMs) interact with external tools and data sources, which in turn enables more capable and versatile AI assistants.

What is MCP?

The Model Context Protocol (MCP) is an open standard designed to bridge AI models with external data and services. It allows LLMs to make structured API calls in a consistent, secure way, facilitating seamless integration with various tools and applications.

Think of MCP as the “USB-C” for AI applications: a universal connector that standardizes how AI models access and interact with external resources.
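To make "structured API calls" a little more concrete, here is a minimal sketch of what a tool invocation can look like on the wire. MCP messages follow JSON-RPC 2.0, and `tools/call` is the method name the specification uses for invoking a tool. The helper `make_tool_call`, the tool name `get_weather`, and its `city` argument are hypothetical and exist only for illustration.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request ids only need to be unique within a session


def make_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request asking a server to run one tool."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


# "get_weather" and "city" are made-up names, not part of any real server.
message = make_tool_call("get_weather", {"city": "Berlin"})
print(message)
```

Every tool, whatever it does, is addressed through the same envelope: a method name, a tool name, and a dictionary of arguments. That uniformity is what the USB-C comparison is pointing at.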

The Evolution of LLMs and the Need for MCP

Initially, LLMs like GPT-3 were limited to generating text based on input prompts. They couldn’t perform actions like sending emails or fetching real-time data. To bridge this gap, developers began integrating LLMs with external tools, allowing them to perform specific tasks. However, this integration was often cumbersome, requiring custom solutions for each tool.

MCP addresses this challenge by providing a standardized protocol, simplifying the process of connecting LLMs with various tools. This not only streamlines development but also ensures consistency and reliability across different integrations.

How Does MCP Work?

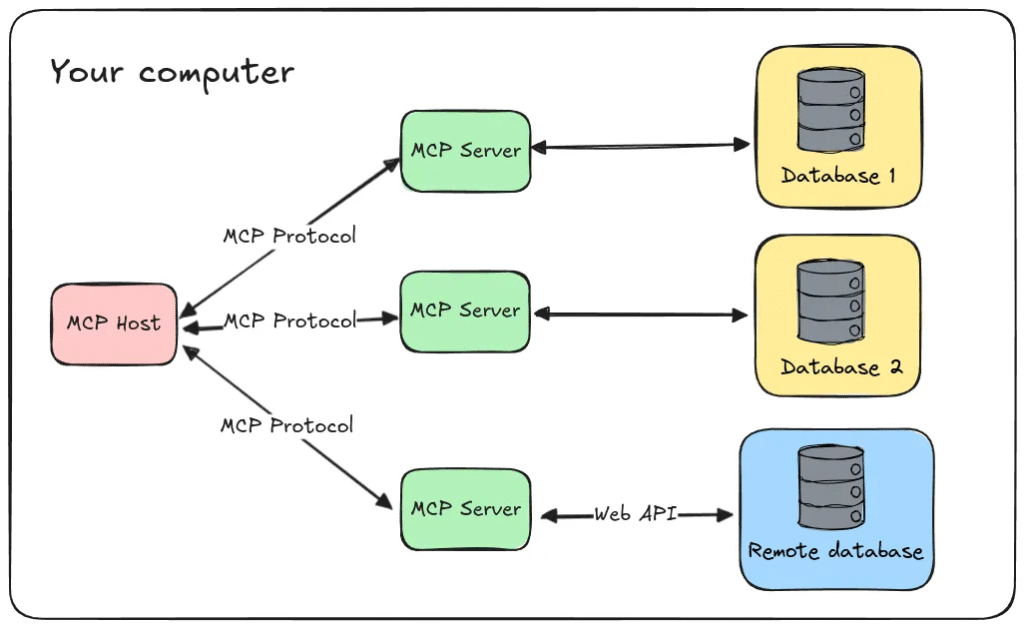

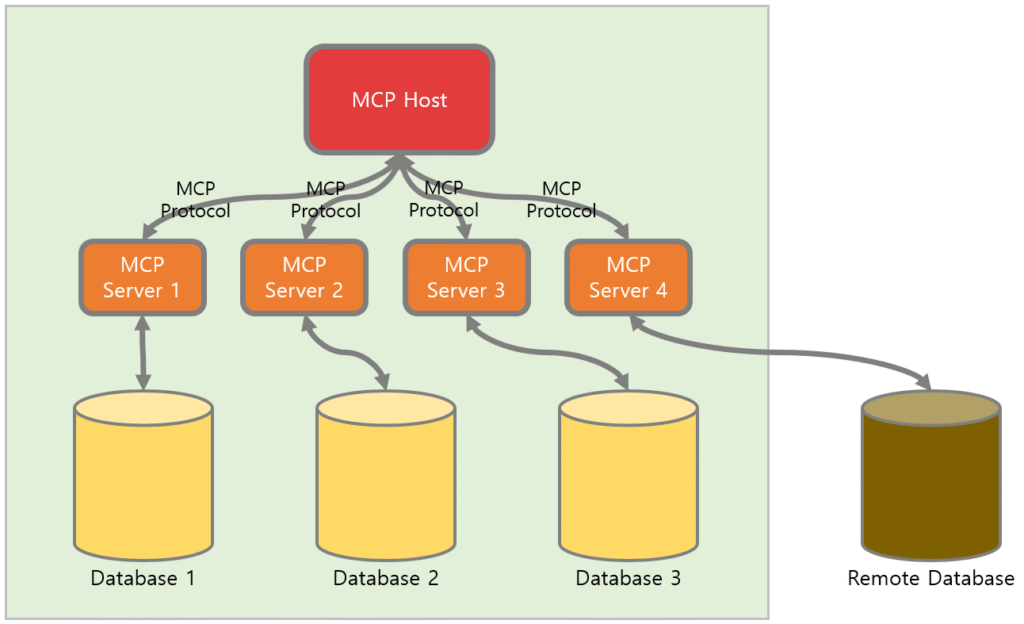

The MCP ecosystem comprises four main components:

- MCP Client: Interfaces like Tempo, Windsurf, and Cursor that interact with the LLM.

- MCP Protocol: The standardized communication protocol facilitating interactions between clients and servers.

- MCP Server: Managed by service providers, it translates the capabilities of external tools into a format understandable by the LLM.

- Service: The external tool or application (e.g., a database or API) that the LLM interacts with.

This architecture ensures that LLMs can access and utilize external services efficiently, without the need for bespoke integrations for each tool.

Real-World Applications of MCP

MCP has been applied across a range of use cases in software development, business process automation, and natural language automation:

- Software Development: Integrated development environments (IDEs) such as Zed, platforms like Replit, and code intelligence tools such as Sourcegraph have integrated MCP to give coding assistants access to real-time code context.

- Enterprise Assistants: Companies like Block use MCP to allow internal assistants to retrieve information from proprietary documents, customer relationship management (CRM) systems, and company knowledge bases.

- Natural Language Data Access: Applications like AI2SQL leverage MCP to connect models with SQL databases, enabling plain-language information retrieval.

- Desktop Assistants: The Claude Desktop app runs local MCP servers to allow the assistant to read files or interact with system tools securely.

- Multi-Tool Agents: MCP supports agentic AI workflows involving multiple tools (e.g., document lookup + messaging APIs), letting an assistant carry out multi-step tasks that combine results from several resources.
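As a rough illustration of the multi-tool case, the sketch below chains a document lookup into a messaging call through one shared entry point. Everything here is hypothetical: `search_docs`, `send_message`, `call_tool`, and the sample data are invented for the example, and in a real agent the model would decide which tool to call next rather than following a fixed two-step plan.

```python
from typing import Callable

# Hypothetical tools; in practice each could sit behind its own MCP server.
DOCS = {"refund-policy": "Refunds are issued within 14 days."}
OUTBOX: list[dict] = []


def search_docs(doc_id: str) -> str:
    return DOCS.get(doc_id, "")


def send_message(channel: str, text: str) -> str:
    OUTBOX.append({"channel": channel, "text": text})
    return "sent"


TOOLS: dict[str, Callable[..., str]] = {
    "search_docs": search_docs,
    "send_message": send_message,
}


def call_tool(name: str, arguments: dict) -> str:
    """Single entry point, so every tool is invoked the same way."""
    return TOOLS[name](**arguments)


def answer_and_notify(doc_id: str, channel: str) -> str:
    # Step 1: look up the document. Step 2: feed its text to the messaging tool.
    passage = call_tool("search_docs", {"doc_id": doc_id})
    return call_tool("send_message", {"channel": channel, "text": passage})


print(answer_and_notify("refund-policy", "#support"))
print(OUTBOX)
```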

Startup Opportunities with MCP

The introduction of MCP opens up a plethora of opportunities for startups and developers:

- MCP App Store: A centralized platform where developers can publish and discover MCP-compatible tools and services.

- Integration Services: Offering services to help businesses integrate their tools with MCP, ensuring compatibility and optimal performance.

- Monitoring and Analytics: Developing tools to monitor MCP interactions, providing insights into performance and potential areas of improvement.

As MCP adoption grows, these opportunities are likely to expand, fostering a vibrant ecosystem of AI-powered applications and services.

Challenges and Considerations

While MCP offers numerous advantages, it’s essential to acknowledge potential challenges:

- Standardization: As with any protocol, widespread adoption is crucial. Competing standards or lack of consensus can hinder MCP’s effectiveness.

- Security: Ensuring secure interactions between LLMs and external tools is paramount. Proper authentication and authorization mechanisms must be in place.

- Performance: Integrations should be optimized to prevent latency or performance bottlenecks.

Addressing these challenges will be critical to realizing MCP’s full potential and ensuring its long-term success.
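The security and performance points can be illustrated with a small wrapper that sits in front of every tool call. This is one possible approach rather than anything MCP prescribes: `guarded_call`, `ToolNotAllowed`, and the two sample tools are hypothetical. The wrapper refuses tools outside an explicit allowlist and records how long each permitted call took.

```python
import time
from typing import Any, Callable


class ToolNotAllowed(Exception):
    """Raised when a requested tool has not been approved."""


def guarded_call(
    name: str,
    arguments: dict,
    tools: dict[str, Callable[..., Any]],
    allowed: set[str],
    timings: list[tuple[str, float]],
) -> Any:
    # Authorization: refuse anything outside the explicit allowlist.
    if name not in allowed:
        raise ToolNotAllowed(name)
    start = time.perf_counter()
    try:
        return tools[name](**arguments)
    finally:
        # Performance: record elapsed seconds even if the tool raises.
        timings.append((name, time.perf_counter() - start))


# Made-up tools for illustration; neither touches the real filesystem.
tools = {
    "read_file": lambda path: f"contents of {path}",
    "delete_file": lambda path: "deleted",
}
timings: list[tuple[str, float]] = []

print(guarded_call("read_file", {"path": "notes.txt"}, tools, {"read_file"}, timings))
```

With only `read_file` in the allowlist, a request for `delete_file` raises `ToolNotAllowed` before the tool runs, and the `timings` list gives a simple starting point for spotting slow integrations.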

Conclusion

MCP represents a significant step forward in the evolution of AI assistants. By standardizing the way LLMs interact with external tools, it paves the way for more capable, efficient, and versatile AI applications. As the ecosystem matures, we can expect a surge in innovative solutions built upon the MCP framework, revolutionizing the way we interact with technology.

Whether you’re a developer, entrepreneur, or AI enthusiast, understanding MCP and its implications is essential in navigating the future of AI integration and application development.